A self-study map of modern Machine learning

October 1, 2016

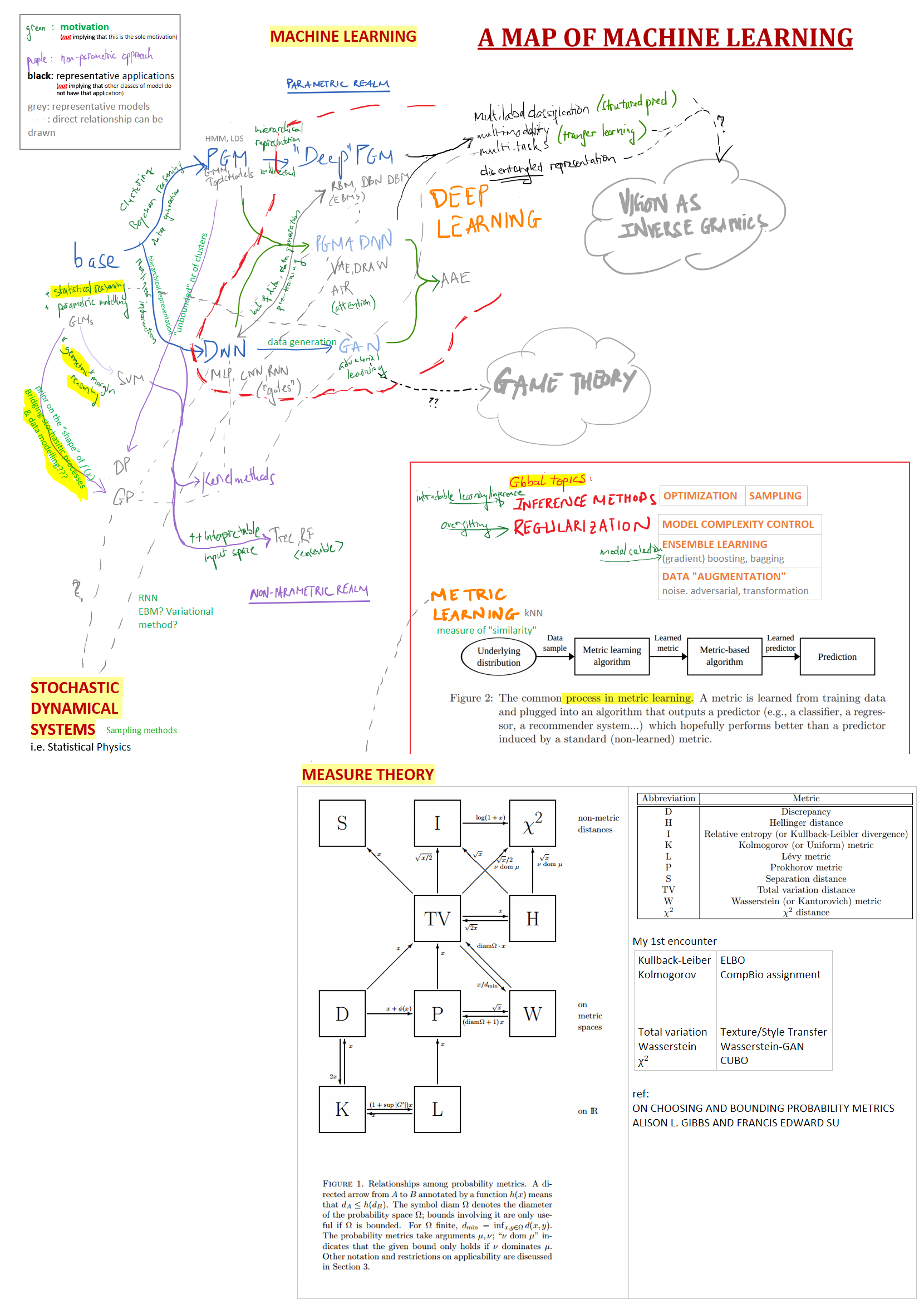

The map below (motivated by metacademy's philosophy) is one of many possible projections of the field of machine learning, with a focus on deep learning and Bayesian modelling.

The map focuses on model classes and their connections to other fields of study. It also aims to lay out a more principled study-plan that makes it easier for aspiring ML self-learners to step up from basic ML or DNN to PGM, provided that they have covered the “Base” course.

Disclaimer: The map was designed according to my own self-study, reading and work through MOOCs, papers and projects related to statistical machine learning, so it may contain some (hopefully minor) inaccuracies. Any suggestions and criticism that would improve this map are highly appreciated.

“Gates” in the figure refers to the gating mechanism found in LSTM / GRU modules. See the ML Appendix for a description of the other acronyms. For a more comprehensive map of model classes, see Shakir Mohamed's talk.
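To make the term concrete, here is a minimal sketch of a single scalar gate in the style of a GRU update gate. The function names, weights and inputs are illustrative assumptions, not taken from the map or from any library. Real LSTM / GRU modules use vector-valued states and learned weight matrices, and a full GRU also has a reset gate, which is omitted here.

```python
import math


def sigmoid(a: float) -> float:
    """Logistic function: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-a))


def gated_update(h_prev: float, x: float,
                 w_z: float, u_z: float, b_z: float,
                 w_h: float, u_h: float, b_h: float) -> float:
    """One scalar step of a simplified GRU-style gated update (hypothetical helper)."""
    z = sigmoid(w_z * x + u_z * h_prev + b_z)         # gate value in (0, 1)
    h_cand = math.tanh(w_h * x + u_h * h_prev + b_h)  # candidate new state
    # The gate interpolates between keeping the old state and writing the new one.
    return (1.0 - z) * h_prev + z * h_cand
```

With a strongly negative gate bias the old state passes through almost unchanged. With a strongly positive one it is overwritten by the candidate. Some formulations swap the roles of `z` and `1 - z`, which is only a matter of convention.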

The study-plan can be read directly from the model classes, i.e. the nodes in the map. The expected content of each node is summarized in the list below. The references are chosen so that their technical details overlap as little as possible with the references in other nodes. The objective is to optimize the time spent on learning while covering a wide enough spectrum of ML research. Also, the further down we go on the map, the more advanced the material from Global topics becomes.

Note: In my experience, working on non-trivial research or practical projects with a well-defined topic and scope helps cover a lot of material while making the theory less daunting. This assumes that you have the minimum foundation in the prerequisites, which vary from project to project.

Disclaimer: The following references reflect my current expertise (which obviously has lots of room to improve). Any suggestions for better alternatives and for missing nodes in the study-plan are welcome. Also, metacademy is an excellent place to look for (in-depth) references if you want to learn about a new ML concept.

- base : as Intended Learning Objectives above

refs: base-notes, base-ref

- PGM (all stochastic nodes, i.e. nodes of the respective graphical model, not of the study-plan) : Intro. to Latent Variable Models + Bayesian inference methods (Variational methods, MCMC sampling e.g. Gibbs sampling) + Sparse regularization + (optional) convex optimization

refs: as in “base” course’s Recap slides, optionally incl. HMM, LDS for modelling sequential data. Further reading: Daphne Koller’s course
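As a small illustration of the Gibbs sampling mentioned in the PGM node, the sketch below samples from a zero-mean, unit-variance bivariate normal by alternately drawing each variable from its exact conditional given the other. The target distribution, function names and parameter values are assumptions picked for illustration, since the conditionals are known in closed form. It is not taken from the referenced courses.

```python
import random


def gibbs_bivariate_normal(rho, n_samples, burn_in=500, seed=0):
    """Gibbs sampler for a standard bivariate normal with correlation rho."""
    rng = random.Random(seed)
    cond_std = (1.0 - rho * rho) ** 0.5  # std of x | y and of y | x
    x, y = 0.0, 0.0
    samples = []
    for i in range(burn_in + n_samples):
        # Resample each variable from its conditional given the current other one.
        x = rng.gauss(rho * y, cond_std)  # x | y ~ N(rho * y, 1 - rho^2)
        y = rng.gauss(rho * x, cond_std)  # y | x ~ N(rho * x, 1 - rho^2)
        if i >= burn_in:
            samples.append((x, y))
    return samples


def sample_correlation(samples):
    """Empirical Pearson correlation of a list of (x, y) pairs."""
    n = len(samples)
    mx = sum(x for x, _ in samples) / n
    my = sum(y for _, y in samples) / n
    cov = sum((x - mx) * (y - my) for x, y in samples) / n
    vx = sum((x - mx) ** 2 for x, _ in samples) / n
    vy = sum((y - my) ** 2 for _, y in samples) / n
    return cov / (vx * vy) ** 0.5
```

Calling `sample_correlation(gibbs_bivariate_normal(0.8, 20000))` should give a value close to 0.8. Consecutive samples are correlated, so the effective sample size is smaller than the nominal one.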

- DNN (all deterministic nodes, i.e. nodes of the respective graphical model, not of the study-plan) : “Architectures” + Applications of modern DNNs to CompVis, NLP + Attention mechanism + (optional) non-convex optimization

refs: CS231n, CS224d. Further reading: Nando de Freitas’s course @ Oxford, Deep Learning textbook

- “Deep” PGM : Undirected PGM + More MCMC-based methods (e.g. CD-k, i.e. contrastive divergence with k Gibbs steps)

refs: TODO - Daphne Koller’s course (undirected PGM lectures), the second half of Geoffrey Hinton’s NN course, and/or Ruslan Salakhutdinov’s tutorials at KDD 2011/2014 (?)

- PGM+DNN : PGM whose stochastic nodes are parametrized by DNNs + SGD-based Variational Inference with Monte Carlo gradient estimates + Structured priors, i.e. structured latent factors (incl. probabilistic attention mechanism)

refs: Shakir Mohamed’s talk for the big picture + (huge body of) relevant works, especially VAE and variants.
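SGD-based variational inference in VAE-style models relies on Monte Carlo estimates of the gradient of an expectation with respect to the parameters of the sampling distribution. Below is a minimal sketch of the reparameterization estimator used by the VAE, for a one-dimensional Gaussian. The test function f(z) = z^2 and all names are illustrative assumptions, chosen because the exact answer (the gradient of E[z^2] with respect to mu is 2 * mu) is known in closed form.

```python
import random


def reparam_grad_mu(mu, sigma, n_samples=20000, seed=0):
    """Monte Carlo estimate of d/dmu E_{z ~ N(mu, sigma^2)}[z^2]."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        eps = rng.gauss(0.0, 1.0)  # noise that does not depend on mu or sigma
        z = mu + sigma * eps       # reparameterized sample, differentiable in mu
        total += 2.0 * z           # d(z^2)/dmu = 2 * z * dz/dmu, with dz/dmu = 1
    return total / n_samples
```

For example, `reparam_grad_mu(1.5, 1.0)` should land close to the exact value 3.0. In an actual model the hand-written derivative would be replaced by automatic differentiation through the sampled `z`.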

- GAN : Adversarial learning + Minimax optimization of an objective function and related issues (e.g. mode collapse) + Insights into Game Theory research (?)

refs: TODO - Ian Goodfellow et al.’s original paper (obviously) + an up-to-date GAN survey (?)
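For reference, the minimax objective from the original GAN paper can be written as follows, where D is the discriminator, G the generator, p_data the data distribution and p_z the prior over the generator's input noise:

```latex
\min_{G} \max_{D} V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator maximizes V by telling real samples from generated ones, while the generator minimizes it by making D(G(z)) close to 1.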

- [TODO - other topics not mentioned]

refs: distribute materials in pending reading lists to appropriate nodes:

  - Metacademy’s Bayesian ML roadmap
  - Dr Truyen Tran’s 3-part tutorial @ AusDM’16 + Exhaustive list of resources
  - Ferenc Huszar’s posts, e.g. Deep Learning is easy; probabilistic interpretation of DAE

- [TODO - …]